Multiple studies suggest that students find Generative Artificial Intelligence (GenAI) tools helpful and do not believe they over-rely on them. But how accurate are these perceptions? “The Widening Gap: The Benefits and Harms of Generative AI for Novice Programmers,” an ICER 2024 paper by James Prather, Brent N. Reeves, Juho Leinonen, Stephen MacNeil, Arisoa S. Randrianasolo, Brett A. Becker, Bailey Kimmel, Jared Wright, and Ben Briggs, examines this question.

In short, for some students GenAI is not merely unhelpful but potentially an impediment to their education. Here’s an excerpt from the abstract:

Some students who did not struggle were able to use GenAI to accelerate, creating code they already intended to make, and were able to ignore unhelpful or incorrect inline code suggestions. But for students who struggled, our findings indicate that previously known metacognitive difficulties persist, and that GenAI unfortunately can compound them and even introduce new metacognitive difficulties.

In other words, when these researchers observed how students actually use GenAI tools, they discovered that GenAI helps some students and hurts others.

Experimental setup

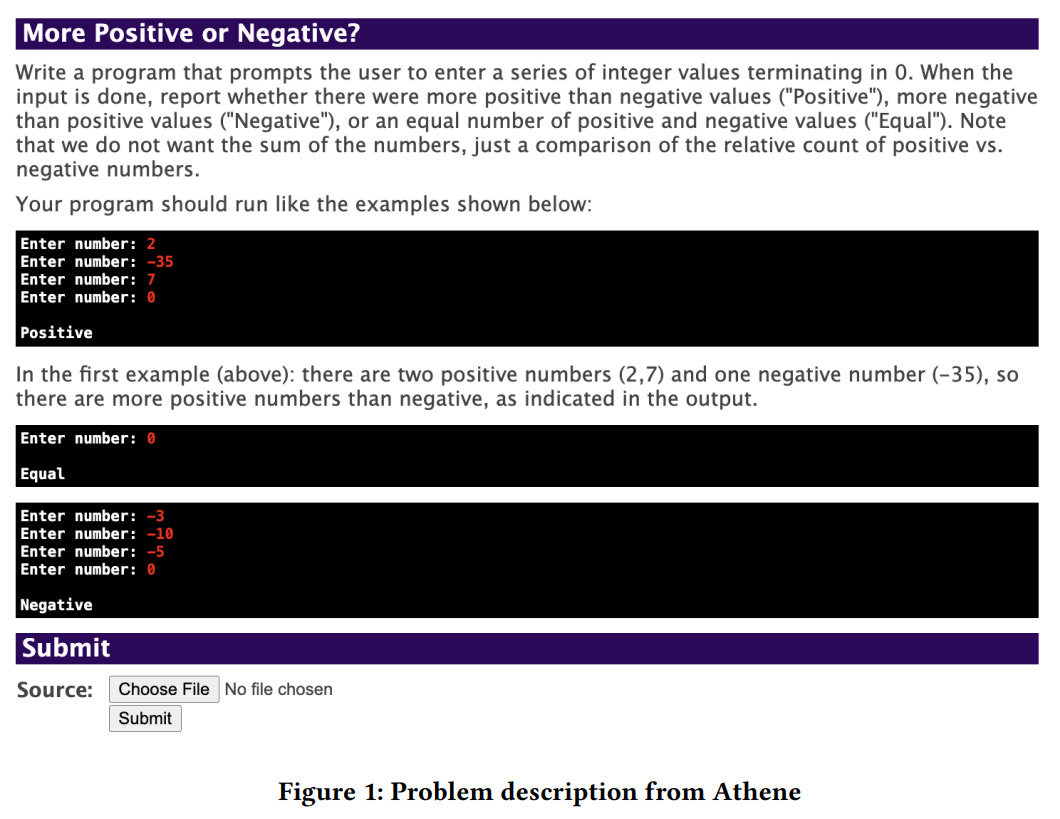

Of the 27 students enrolled in a CS1 course at a small research university in the USA, 21 opted into the study for extra credit. These students were asked to solve a programming problem (see figure) in 35 minutes, and they were given access to both GitHub Copilot and ChatGPT. As each participant worked on the problem, they verbalized their thoughts, a Tobii eye tracker recorded their eye movements, and researchers took notes. Afterwards, the researchers asked each participant about their perceptions of AI (e.g., how helpful it was during problem solving), their prior programming experience, and a few other topics.

Every student except one completed a working program within the time limit; the average completion time was 17.1 minutes (standard deviation 8.1 minutes). That is a success rate above 95% (20 of 21), which sounds like a positive outcome, but the picture isn’t so clear when we take a closer look.

Some students struggled

The researchers compiled a list of eight metacognitive difficulties: five from prior work (an ICER 2018 paper, published before the advent of GenAI) and three new difficulties centered on participants’ use of GenAI. Ten participants exhibited at least one metacognitive difficulty; here are a few examples:

Participant 1’s code wouldn’t compile. After struggling and getting interrupted by Copilot for a while, they eventually said, “Okay, I’m using ChatGPT now. I love ChatGPT. It’s so helpful.” Subsequently, even though ChatGPT’s suggestions were “wildly different” from their own code, they stuck to their solution. This was an example of the Achievement metacognitive difficulty (“Unwillingness to abandon a wrong solution due to a false sense of being nearly done”).

Participant 7 turned to ChatGPT much earlier in the process (“Woah, interesting. The first thing I’ll do is pull up ChatGPT”), and after a few hiccups, they made substantial progress toward a correct solution. But since they “struggled to fix basic errors” while working, they exhibited a Progression difficulty (“Being conceptually behind in the course material but unaware of it due to a false sense of confidence”).

Participant 11 accepted a suggestion from Copilot even though the suggested line contained a variable that had not been declared; thus, the participant exhibited a Mislead difficulty (“The tool leads the user down the wrong path”). Later, incorrect suggestions from ChatGPT further contributed to the participant’s confusion.

If it were simply the case that some participants struggled while others did not, that would be business as usual, since helping students in their endeavors is, I believe, the primary purpose of CS education research.

But GenAI, with its increasing availability and capabilities, introduces complications. In particular, as the paper puts it, GenAI creates an “illusion of competence” and a contradiction between students’ expressed beliefs and their behavior. That is, some students who had struggled claimed that they didn’t really need help from Copilot/ChatGPT, but as far as the authors could tell, they did.

A paper from ICER 2018 (mentioned earlier) describes the results of a similar experiment. There, 11 out of 31 participants did not solve the programming problem within 35 minutes, a success rate below 65%. But all 11 of them were aware that they had not solved the problem in the allotted time. In contrast, according to the authors of the 2024 paper, GenAI impeded participants’ ability to assess their own understanding: “From the evidence presented above, it appears that most of these ten who struggled thought they understood more than they actually did.” So GenAI might help struggling students in the short run while hampering their metacognitive abilities, which could be detrimental in the long run.
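For readers who want to check the comparison, this short Python sketch recomputes both success rates from the counts reported in the two studies: 21 participants with one unfinished in 2024, and 31 participants with 11 unfinished in 2018. The `success_rate` helper is a hypothetical name used only for illustration; it simply divides completions by participants.

```python
def success_rate(participants: int, unsolved: int) -> float:
    """Fraction of participants who finished a working solution in time (hypothetical helper)."""
    return (participants - unsolved) / participants


# 2024 study: 21 participants, one did not finish within 35 minutes.
rate_2024 = success_rate(participants=21, unsolved=1)

# 2018 study: 31 participants, 11 did not finish within 35 minutes.
rate_2018 = success_rate(participants=31, unsolved=11)

print(f"2024: {rate_2024:.1%}")  # 2024: 95.2%
print(f"2018: {rate_2018:.1%}")  # 2018: 64.5%
```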

(Of course, it’s possible that the students were fully aware of how reliant they were on GenAI and did not want to openly express themselves. But even if that were the case, it’s probably harder for educators to help struggling students if everyone appears to be doing fine.)

Others had more success

Eleven of the 21 participants did not exhibit any metacognitive difficulties. Participant 19 finished in just 5 minutes; when they were asked if Copilot had been helpful, they responded, “Definitely sped it up. It gives you a lot of the scaffolding you need to solve the problem so you don’t have to worry about some of that.”

Importantly, these participants were able to ignore incorrect suggestions. This relates to a 1994 paper by Marvin Minsky cited by the authors, in which he wrote, “In order to think effectively, we must ‘know’ a good deal about what not to think! Otherwise we get bad ideas — and also, take too long.” Accordingly, the authors identify the “ability to ignore incorrect or unhelpful GenAI suggestions” as an important skill for novices to develop.

Alternative GenAI tools

Since ChatGPT and Copilot can hinder students’ learning, and banning GenAI is impractical, the authors point to a few “novice-friendly tools” that expose students to GenAI “in scaffolded ways” (e.g., Prompt Problems, CodeHelp, CodeAid, Ivie). They also propose explicitly teaching metacognitive behaviors and skills.

Perhaps someday it will be clear that GenAI broadly improves educational outcomes. But as the paper suggests, we should be cognizant of its effects on everyone, including the students who appear to benefit from it.